O (N log N) 복잡성-선형과 유사합니까?

그래서 사소한 질문을해서 묻히게 될 것 같은데 뭔가 좀 헷갈려요.

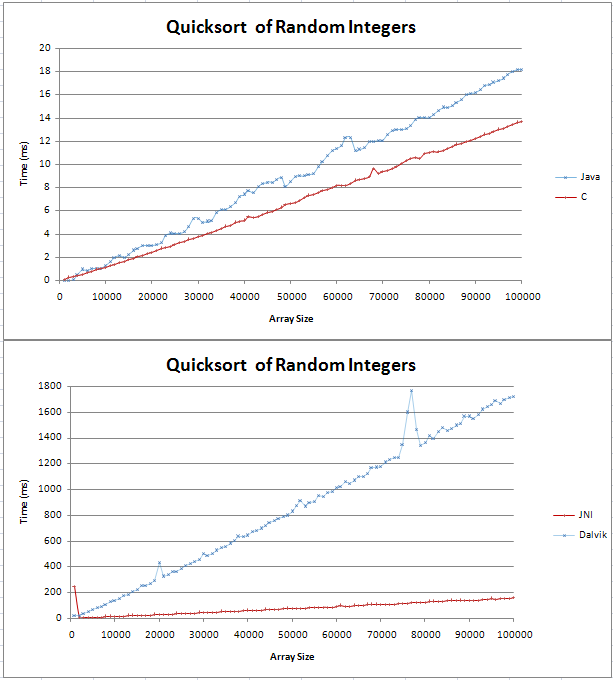

Java와 C로 퀵 정렬을 구현했으며 몇 가지 기본적인 비교를 수행했습니다. 그래프는 2 개의 직선으로 나 왔으며 C는 100,000 개의 임의의 정수를 통해 Java보다 4ms 더 빠릅니다.

내 테스트 용 코드는 여기에서 찾을 수 있습니다.

(n log n) 선이 어떻게 생겼는지 확신 할 수 없었지만 직선이라고 생각하지 않았습니다. 나는 이것이 예상 된 결과이고 내 코드에서 오류를 찾으려고하지 않아야한다는 것을 확인하고 싶었습니다.

나는 공식을 엑셀에 집어 넣었고 10 진법의 경우 처음에는 꼬임이있는 직선 인 것 같습니다. log (n)과 log (n + 1)의 차이가 선형 적으로 증가하기 때문입니까?

감사,

Gav

그래프를 더 크게 만들면 O (n logn)이 직선이 아니라는 것을 알 수 있습니다. 그러나 예, 선형 동작에 매우 가깝습니다. 그 이유를 알아 보려면 아주 큰 수의 로그를 취하십시오.

예 (10 진수) :

log(1000000) = 6

log(1000000000) = 9

…

따라서 1,000,000 개의 숫자를 정렬하기 위해 O (n logn) 정렬은 measly factor 6을 추가합니다 (또는 대부분의 정렬 알고리즘이 base 2 로그에 의존하기 때문에 조금 더). 끔찍하지 않습니다.

사실,이 로그 요인이다 그래서 크기의 대부분의 주문에 대한 설립 O (N logn) 알고리즘은 선형 시간 알고리즘을 능가하는 것이 매우 작은. 눈에 띄는 예는 접미사 배열 데이터 구조의 생성입니다.

A simple case has recently bitten me when I tried to improve a quicksort sorting of short strings by employing radix sort. Turns out, for short strings, this (linear time) radix sort was faster than quicksort, but there was a tipping point for still relatively short strings, since radix sort crucially depends on the length of the strings you sort.

FYI, quicksort is actually O(n^2), but with an average case of O(nlogn)

FYI, there is a pretty big difference between O(n) and O(nlogn). That's why it's not boundable by O(n) for any constant.

For a graphical demonstration see:

For even more fun in a similar vein, try plotting the time taken by n operations on the standard disjoint set data structure. It has been shown to be asymptotically n α(n) where α(n) is the inverse of the Ackermann function (though your usual algorithms textbook will probably only show a bound of n log log n or possibly n log* n). For any kind of number that you will be likely to encounter as the input size, α(n) ≤ 5 (and indeed log* n ≤ 5), although it does approach infinity asymptotically.

What I suppose you can learn from this is that while asymptotic complexity is a very useful tool for thinking about algorithms, it is not quite the same thing as practical efficiency.

- Usually the O( n*log(n) ) algorithms have a 2-base logarithmic implementation.

- For n = 1024, log(1024) = 10, so n*log(n) = 1024*10 = 10240 calculations, an increase by an order of magnitude.

So, O(n*log(n)) is similar to linear only for a small amount of data.

Tip: don't forget that quicksort behaves very well on random data and that it's not an O(n*log(n)) algorithm.

Any data can be plotted on a line if the axes are chosen correctly :-)

Wikipedia says Big-O is the worst case (i.e. f(x) is O(N) means f(x) is "bounded above" by N) https://en.wikipedia.org/wiki/Big_O_notation

Here is a nice set of graphs depicting the differences between various common functions: http://science.slc.edu/~jmarshall/courses/2002/spring/cs50/BigO/

The derivative of log(x) is 1/x. This is how quickly log(x) increases as x increases. It is not linear, though it may look like a straight line because it bends so slowly. When thinking of O(log(n)), I think of it as O(N^0+), i.e. the smallest power of N that is not a constant, since any positive constant power of N will overtake it eventually. It's not 100% accurate, so professors will get mad at you if you explain it that way.

The difference between logs of two different bases is a constant multiplier. Look up the formula for converting logs between two bases: (under "change of base" here: https://en.wikipedia.org/wiki/Logarithm) The trick is to treat k and b as constants.

In practice, there are normally going to be some hiccups in any data you plot. There will be differences in things outside your program (something swapping into the cpu ahead of your program, cache misses, etc). It takes many runs to get reliable data. Constants are the biggest enemy of trying to apply Big O notation to actual runtime. An O(N) algorithm with a high constant can be slower than an O(N^2) algorithm for small enough N.

log(N) is (very) roughly the number of digits in N. So, for the most part, there is little difference between log(n) and log(n+1)

그 위에 실제 선형 선을 그려 보면 작은 증가를 볼 수 있습니다. 50,0000의 Y 값은 100,000의 1/2 Y 값보다 작습니다.

거기에 있지만 작습니다. 이것이 O (nlog (n))이 좋은 이유입니다!

참고 URL : https://stackoverflow.com/questions/962545/on-log-n-complexity-similar-to-linear

'development' 카테고리의 다른 글

| 만드는 방법 (0) | 2020.10.27 |

|---|---|

| find 명령에 대한 exec 매개 변수에서 파이프를 어떻게 사용합니까? (0) | 2020.10.27 |

| PHP에서 정적 변수 / 함수를 언제 사용합니까? (0) | 2020.10.27 |

| TextBox에서 Enter 키 캡처 (0) | 2020.10.27 |

| Java GC : 왜 두 개의 생존 지역입니까? (0) | 2020.10.27 |